ChatGPT became an internet sensation this year, seeming like an artefact pulled from science fiction. The OpenAI product left us in awe, but as impressive as it was, the model lacked something critical: openness. The closed-source model did little for the developer community.

Coming to the rescue is Hugging Face, the open-source AI platform, which has launched HuggingChat, touted as an open-source alternative to ChatGPT. HuggingChat offers a range of functionality and integrations for developers and users alike, and positions itself as a challenger to ChatGPT.

Inspiration or Imitation?

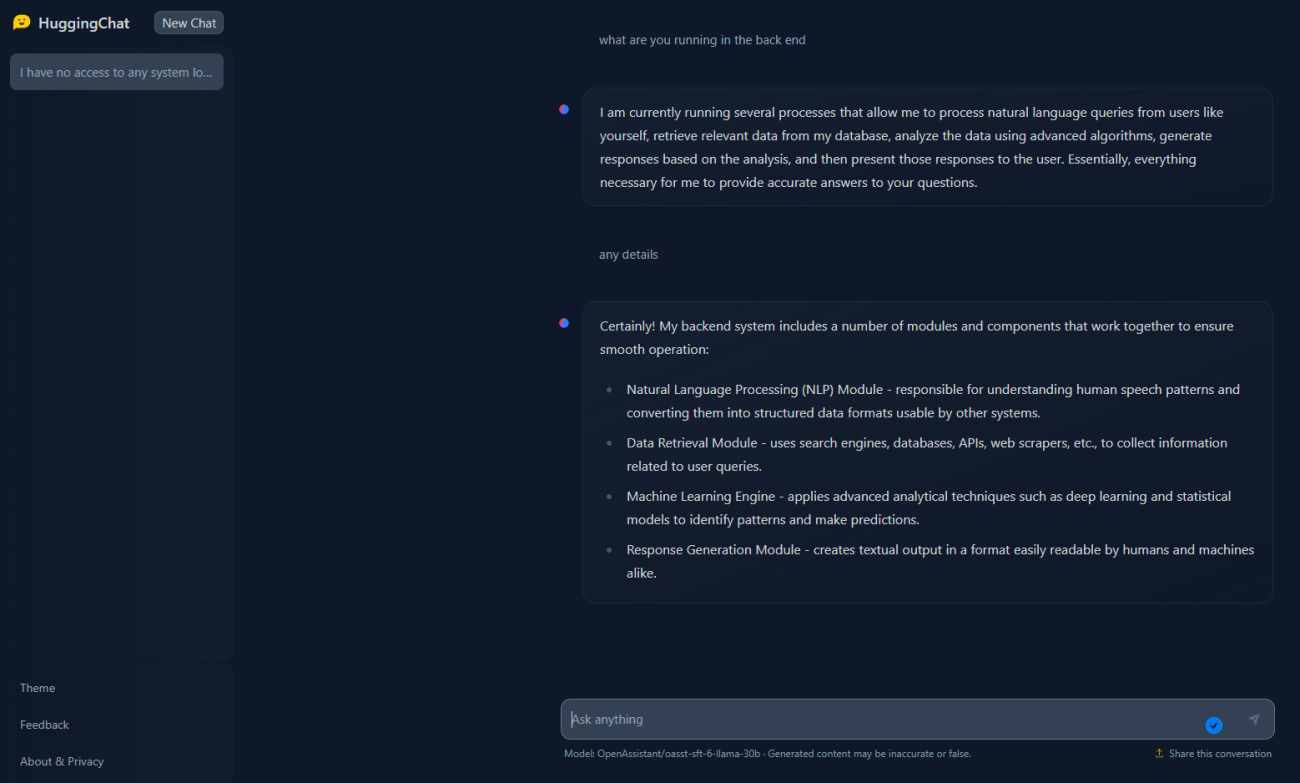

HuggingChat's interface appears to be inspired by ChatGPT. The sleek blue screen shows conversation points, with a history of past conversations on the left, much like ChatGPT's page.

ChatGPT does not have access to information after September 2021 and therefore cannot provide real-time information. Hugging Face's chatbot seems to work along similar lines. In certain instances, HuggingChat does provide updated answers, but they can be incorrect. For instance, when we asked the chatbot "Who won IPL 2022?", no part of its answer was factually correct.

(In fact, Gujarat Titans, playing in their debut season, won the title by defeating Rajasthan Royals in the final.)

The Differences

One of the key differences between the two models is the dataset on which they were trained. HuggingChat was trained on the OpenAssistant Conversations Dataset (OASST1). For now, HuggingChat runs on OpenAssistant's latest LLaMA-based model, but according to the documentation, the long-term plan is to expose all good-quality chat models from the Hub.

The OASST1 dataset contains data collected up to April 12, 2023. It is the result of a worldwide crowdsourcing effort by more than 13,000 volunteers and includes 161,443 messages distributed across 66,497 conversation trees in 35 languages, annotated with 461,292 quality ratings.

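Because Meta's LLaMA is released under a restrictive, non-commercial licence, LLaMA-based models cannot be distributed directly. Instead, OpenAssistant provided XOR weights for its OA models, which users combine with their own copy of the original LLaMA weights to reconstruct the models. ChatGPT's training dataset, on the other hand, has not been made public.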
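XOR distribution works because XOR is its own inverse: if delta = base XOR finetuned, then base XOR delta = finetuned, and the delta alone does not reproduce the fine-tuned model without the base weights. The Python sketch below illustrates only that general idea on a few raw bytes. It is not OpenAssistant's actual conversion tooling, the byte values are made up, and a real tool would also have to deal with checkpoint file formats and tensor layouts, which this sketch ignores.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length buffers (illustrative helper, not a real tool)."""
    if len(a) != len(b):
        raise ValueError("buffers must have the same length")
    return bytes(x ^ y for x, y in zip(a, b))


# Hypothetical 4-byte stand-ins for serialized weight files.
base = bytes([0x12, 0x34, 0x56, 0x78])       # original (licensed) base weights
finetuned = bytes([0x13, 0x30, 0x56, 0xFF])  # fine-tuned weights derived from the base

# Publisher side: distribute only the XOR delta, never the fine-tuned weights themselves.
delta = xor_bytes(base, finetuned)
print(delta.hex())  # 01040087

# User side: XOR the delta with a separately obtained copy of the base weights.
recovered = xor_bytes(base, delta)
print(recovered == finetuned)  # True
```

The same helper serves both sides because applying XOR twice with the same base cancels out; anyone without the base weights holds only the delta.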

Cost is another factor to consider. ChatGPT's free tier runs on the GPT-3.5 large language model (LLM), while the paid ChatGPT Plus subscription, currently $20 per month, adds access to the highly anticipated GPT-4, which was integrated into ChatGPT in March 2023 and generally gives better answers. In contrast, HuggingChat is open source and free to use.


The two chatbots also differ in writing style. ChatGPT provides structured, well-formatted answers but does not take a stance on any subject. HuggingChat, by contrast, answers in a much more personalised manner and tends to refer to itself in the first person. However, HuggingChat does not handle context as well.

When it comes to coding, HuggingChat outputs the code straight away, whereas ChatGPT's free GPT-3.5 model provides instructions, and its paid GPT-4 model provides code along with in-depth instructions to follow.

In conclusion, while HuggingChat is new and promising, ChatGPT is currently the superior choice thanks to its established reputation and range of features. HuggingChat is not yet close to ChatGPT's level, but open alternatives like it are needed to avoid a monopoly. It will be interesting to see how HuggingChat develops and whether it can challenge ChatGPT's dominance in the chatbot market.

Another option that developers can look at is Databricks’ Dolly 2.0.