Google DeepMind last week released AlphaCode 2, an update to AlphaCode, alongside Gemini. The new version has improved problem-solving capabilities for competitive programming. When the original AlphaCode was released last year, it was compared to Tabnine, Codex and Copilot. With this update, AlphaCode 2 stands well ahead.

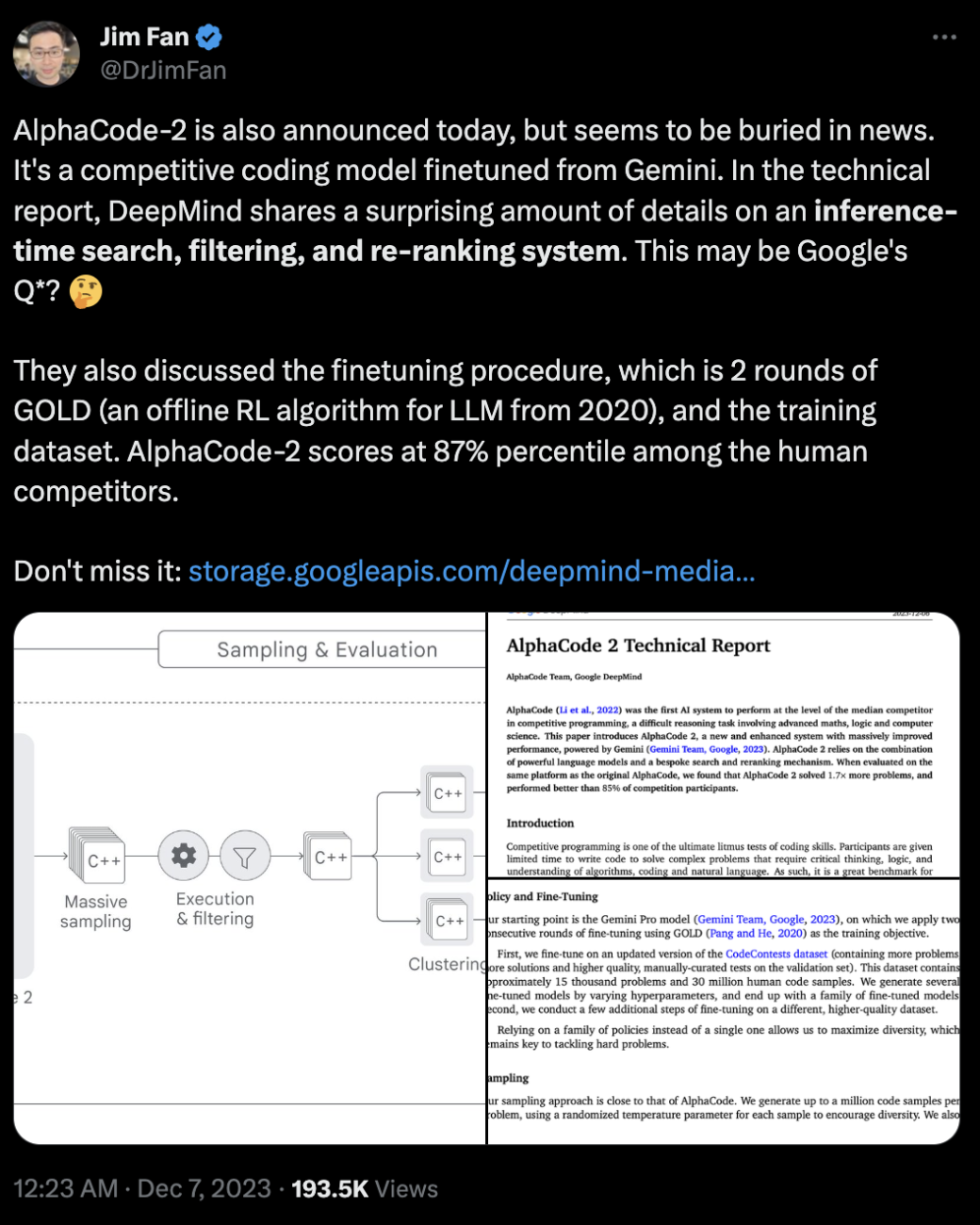

AlphaCode 2 approaches problem-solving by using a set of “policy models” that produce various code samples for each problem. It then eliminates code samples that don’t match the problem description. AlphaCode 2 employs a multimodal approach that integrates data from diverse sources, including web documents, books, coding resources, and multimedia content.
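The filtering step can be pictured with a toy sketch. This is not DeepMind's implementation: it assumes the problem statement comes with example input/output pairs and that a sample is discarded when it fails any of them or crashes. The candidate functions and the names `passes_examples` and `filter_candidates` are hypothetical stand-ins for generated programs and the real filtering machinery.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical stand-in for a generated program: maps input text to output text.
Candidate = Callable[[str], str]
Example = Tuple[str, str]  # (input text, expected output text)


def passes_examples(candidate: Candidate, examples: Sequence[Example]) -> bool:
    """Return True only if the candidate reproduces every example output."""
    for given_input, expected in examples:
        try:
            if candidate(given_input).strip() != expected.strip():
                return False
        except Exception:
            # Assumption: a crashing sample is treated as a failed sample.
            return False
    return True


def filter_candidates(candidates: Sequence[Candidate],
                      examples: Sequence[Example]) -> List[Candidate]:
    """Keep only the candidates that agree with the problem's examples."""
    return [c for c in candidates if passes_examples(c, examples)]


# Toy problem: read two integers and print their sum.
def sum_correct(text: str) -> str:
    a, b = map(int, text.split())
    return str(a + b)


def sum_wrong(text: str) -> str:
    a, b = map(int, text.split())
    return str(a * b)  # wrong operation, fails the examples


def sum_crashes(text: str) -> str:
    return str(int(text))  # raises ValueError on "1 2"


EXAMPLES = [("1 2", "3"), ("10 5", "15")]
survivors = filter_candidates([sum_correct, sum_wrong, sum_crashes], EXAMPLES)
print([c.__name__ for c in survivors])
```

In this toy run only `sum_correct` survives. A real system would compile and execute untrusted generated source in a sandbox rather than call Python functions directly.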

This approach has been compared to the curious Q* from OpenAI. Instead of being a tool that regurgitates information, Q* is rumoured to be able to solve maths problems it has not seen before. The technology is speculated to be an advancement in solving basic maths problems, a challenging task for existing AI models.

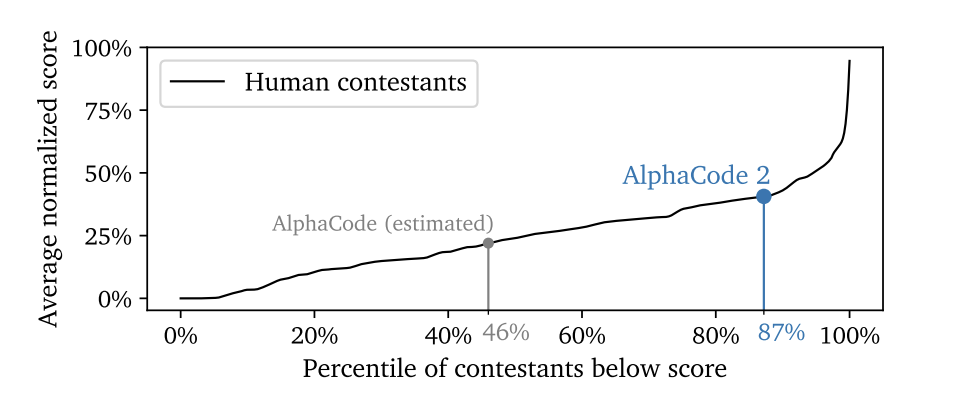

While Q* is only speculation, AlphaCode 2 performed better than 85% of competitors on average. It solved 43% of problems within 10 attempts across 12 coding contests with more than 8,000 participants, roughly 1.7 times the original AlphaCode's success rate of 25%.

However, like any AI model, AlphaCode 2 has its limitations. The whitepaper notes that AlphaCode 2 involves substantial trial and error, operates with high costs at scale, and depends significantly on its ability to discard clearly inappropriate code samples. The whitepaper suggests that upgrading to a more advanced version of Gemini, such as Gemini Ultra, could potentially address some of these issues.

What sets AlphaCode 2 apart

The AlphaCode 2 Technical Report presents significant improvements. Enhanced by the Gemini model, AlphaCode 2 solves 1.7 times as many problems as its predecessor and surpasses 85% of participants in competitive programming. Its architecture includes powerful language models, policy models for code generation, mechanisms for diverse sampling, and systems for filtering and clustering code samples.

To reduce redundancy, a clustering algorithm groups together code samples that are “semantically similar.” In the final step, a scoring model within AlphaCode 2 identifies the most suitable solution from the 10 largest clusters of code samples, which forms AlphaCode 2’s response to the problem.
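A minimal sketch of the clustering and selection idea follows. It makes several assumptions that the article does not confirm: that "semantically similar" means producing identical outputs on a shared set of probe inputs, that one candidate is taken from each retained cluster, and that the scoring model can be replaced here by a hand-written lookup table. The names `cluster_by_behaviour`, `pick_submissions` and `TOY_SCORES` are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical stand-in for a generated program.
Candidate = Callable[[int], int]


def cluster_by_behaviour(candidates: Sequence[Candidate],
                         probe_inputs: Sequence[int]) -> List[List[Candidate]]:
    """Group candidates that return identical outputs on every probe input."""
    clusters: Dict[Tuple[int, ...], List[Candidate]] = defaultdict(list)
    for cand in candidates:
        signature = tuple(cand(x) for x in probe_inputs)
        clusters[signature].append(cand)
    return list(clusters.values())


def pick_submissions(candidates: Sequence[Candidate],
                     probe_inputs: Sequence[int],
                     score: Callable[[Candidate], float],
                     max_clusters: int = 10) -> List[Candidate]:
    """Keep the largest clusters and take the best-scored member of each."""
    clusters = cluster_by_behaviour(candidates, probe_inputs)
    largest = sorted(clusters, key=len, reverse=True)[:max_clusters]
    return [max(cluster, key=score) for cluster in largest]


# Toy candidates: three behave like "square", one like "double".
def square_a(x: int) -> int:
    return x * x


def square_b(x: int) -> int:
    return x ** 2


def square_c(x: int) -> int:
    return abs(x) * abs(x)


def double(x: int) -> int:
    return 2 * x


# Assumption: made-up scores standing in for a learned scoring model.
TOY_SCORES = {"square_a": 0.2, "square_b": 0.9, "square_c": 0.5, "double": 0.4}

picks = pick_submissions(
    [square_a, square_b, square_c, double],
    probe_inputs=[0, 1, 2, 3],
    score=lambda cand: TOY_SCORES[cand.__name__],
)
print([c.__name__ for c in picks])
```

Grouping by behaviour rather than by source text means that differently written but equivalent programs collapse into one cluster, so a limited budget of attempts is spent on genuinely different solutions.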

The fine-tuning process involves two stages using the GOLD training objective. The system generates a vast number of code samples per problem, prioritising C++ for quality. Clustering and a scoring model help in selecting optimal solutions.

Tested on Codeforces, AlphaCode 2 shows remarkable performance gains, marking a significant advancement in AI’s role in solving complex programming problems. However, the system still relies heavily on trial and error and carries high operational costs.

Compared with other code generators, AlphaCode 2 shows a distinct strength in competitive programming. GitHub Copilot, powered by OpenAI Codex, instead serves as a broader coding assistant. Codex, an AI system developed by OpenAI, is particularly adept at code generation due to its training on a vast array of public source code.

Other notable tools in this emerging field, such as EleutherAI’s Llemma and Meta’s Code Llama, bring their own distinct advantages. Llemma, with its 34-billion parameter model, specialises in mathematics, even outperforming Google’s Minerva. Code Llama, based on Llama 2, focuses on enabling open-source development of AI coding assistants, offering a unique advantage in creating company-specific AI tools.

AlphaCode 2 takes a different approach from other AI coding tools. It combines machine learning, large-scale code sampling, and problem-solving strategies tailored to the complex problems of competitive programming. Other tools, such as GitHub Copilot and EleutherAI’s Llemma, focus on general coding help and maths problems respectively.

A Close Contest

For OpenAI, Q* reportedly represents a significant advancement: an AI capable of solving maths problems it had not seen before. The breakthrough, said to involve Sutskever’s work, reportedly led to the creation of models with enhanced problem-solving abilities.

However, the rapid advancement in this technology has raised concerns within OpenAI about the pace of progress and the need for adequate safeguards for such powerful AI models.

While both AlphaCode 2 by Google DeepMind and the speculated Q* represent significant advancements in AI, they are not yet widely available to the public.