In a previous article, we introduced the Doc2Vec model, an important model for document embedding. Document embedding techniques produce fixed-length vector representations of documents, which makes complex NLP tasks easier and faster. There are different variants of the Doc2Vec model, and Distributed Bag-of-Words (DBOW) is one of them; it is often reported to perform well compared with its peers. In this article, we focus on document embeddings using the DBOW model, with a hands-on implementation in Gensim. The major points to be discussed are listed below.

Table of contents

- What are document embeddings?

- What are Doc2Vec models?

- Distributed Bag-of-Words (DBOW)

- Implementing DBOW using Gensim

Let’s start by understanding document embeddings.

What are document embeddings?

Beyond practice exercises, real-world NLP applications require machines to understand the context behind a text, which is usually longer than a single word. For example, suppose we want to find cricket-related tweets on Twitter. We can start by making a list of words related to cricket and then search for tweets that contain any word from the list.

This approach works to an extent, but what if a tweet related to cricket does not contain any word from the list? Consider a tweet that mentions the name of an Indian cricketer without saying that he is a cricketer. In daily life, we come across many applications and websites, such as Facebook, Twitter, and Stack Overflow, where a search that relies on this kind of keyword matching can fail to return the right results.
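As a rough illustration of where keyword matching breaks down, here is a minimal sketch. The keyword list and the tweets are made up for this example and are not taken from any real data set.

```python
# Hypothetical keyword list and tweets, made up for illustration only.
CRICKET_KEYWORDS = {"cricket", "wicket", "batsman", "bowler", "innings"}

tweets = [
    "What a wicket in the final over!",
    "Kohli scores yet another century.",  # about cricket, but contains no keyword
    "Traffic is terrible this morning.",
]


def mentions_keyword(text, keywords):
    """Return True if any lowercased token of `text` appears in `keywords`."""
    tokens = (token.strip(".,!?").lower() for token in text.split())
    return any(token in keywords for token in tokens)


matches = [tweet for tweet in tweets if mentions_keyword(tweet, CRICKET_KEYWORDS)]
print(matches)
```

Only the first tweet is matched. The second tweet is also about cricket, but the filter misses it because it names a player instead of using a word from the list.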

To cope with such difficulties, we can use document embeddings, which learn a vector representation of each document from its word embeddings. This can also be thought of as learning the vector representation at the paragraph level instead of learning it from the whole corpus.

Document embedding can also be viewed as applying word embeddings to discrete pieces of the corpus. When word embeddings are learned over the whole corpus at once, it is difficult to capture the contextual relationships between words in vector form. Extracting smaller pieces of the corpus and converting them into vector representations gives more scope for capturing those relationships.

For example, homonyms can have different meanings in different paragraphs, and a single vector per word makes it difficult to tell those meanings apart. Splitting the corpus paragraph-wise or sentence-wise and then generating a vector representation for each piece can give us more meaningful representations.

What are Doc2Vec models?

As discussed above, document embeddings can be considered vector representations, built on word embeddings, of discrete pieces of text. In the field of document embedding, Doc2Vec is the model we most commonly find implemented. To sum up the theory, Doc2Vec is a model that learns vector representations of paragraphs or whole text documents. A detailed explanation of the Doc2Vec model can be found in this article.

Doc2Vec models are similar to Word2Vec models. In Word2Vec, the vector of a word is learned from the words that surround it; Doc2Vec works in the same way but adds a paragraph vector that supplies the context of the paragraph.

Also, Doc2Vec models have two variants similar to Word2Vec:

- Distributed memory model

- Distributed bag of words

These variants mirror the variants of Word2Vec. The distributed memory model is similar to the continuous bag-of-words (CBOW) model, and the distributed bag of words model is similar to the skip-gram model. In this article, we focus on the distributed bag of words model, so let’s move on to its introduction.

Distributed Bag-of-Words (DBOW)

The Distributed Bag-of-Words (DBOW) model is similar to the skip-gram variant of Word2Vec because it also predicts words from a single input. The difference between DBOW and the distributed memory model is that the distributed memory model predicts a target word from the paragraph vector combined with the surrounding context words, whereas DBOW ignores the context words in the input and uses the paragraph vector alone to predict words from the paragraph. The difference between DBOW and skip-gram lies in the input: skip-gram uses the target word as input, whereas DBOW uses the paragraph ID as input to predict words randomly sampled from the document. The figure below illustrates how the distributed bag of words model works:

In this image, we can see that the paragraph ID is used to predict words that are randomly sampled from the paragraph. Starting from the paragraph ID, the model predicts a number of these words, in the same way that skip-gram predicts context words from a target word. In several reported comparisons, distributed bag-of-words models produce results as good as or better than distributed memory models, although this depends on the task and the data. Let’s see how we can implement a distributed bag of words model.
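Before turning to Gensim, the prediction step described above can be sketched in plain NumPy. This is only a toy illustration under simplifying assumptions: the paragraphs, the vector size, and the learning rate are made up, and it uses a full softmax over the vocabulary, whereas practical implementations such as Gensim rely on faster approximations like negative sampling or hierarchical softmax. The function name `dbow_step` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy paragraphs, made up for illustration; the index in the list is the paragraph ID.
paragraphs = [
    ["human", "computer", "interface"],
    ["graph", "trees", "minors"],
]
vocab = sorted({word for words in paragraphs for word in words})
word_to_id = {word: i for i, word in enumerate(vocab)}

dim = 4
doc_vecs = rng.normal(scale=0.1, size=(len(paragraphs), dim))  # one vector per paragraph ID
out_weights = np.zeros((len(vocab), dim))  # output layer, one row per vocabulary word


def softmax(scores):
    exp = np.exp(scores - scores.max())
    return exp / exp.sum()


def dbow_step(doc_id, word_id, lr=0.1):
    """One gradient step: predict a sampled word from the paragraph vector alone."""
    doc_vec = doc_vecs[doc_id].copy()
    probs = softmax(out_weights @ doc_vec)
    grad = probs.copy()
    grad[word_id] -= 1.0  # gradient of the cross-entropy loss w.r.t. the scores
    doc_vecs[doc_id] -= lr * (out_weights.T @ grad)
    out_weights[:] -= lr * np.outer(grad, doc_vec)


for epoch in range(300):
    for doc_id, words in enumerate(paragraphs):
        sampled = words[rng.integers(len(words))]  # randomly sample a word from the paragraph
        dbow_step(doc_id, word_to_id[sampled])

# Probability mass that each paragraph vector assigns to its own words after training.
own_mass = [
    float(sum(softmax(out_weights @ doc_vecs[doc_id])[word_to_id[w]] for w in words))
    for doc_id, words in enumerate(paragraphs)
]
print(own_mass)
```

Note that no context words enter the input at any point: the only input is the vector looked up by paragraph ID, which is what distinguishes DBOW from the distributed memory model.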

Implementing DBOW model using Gensim

To implement a distributed bag of words model, we are going to use the Python language and the Gensim library. A detailed introduction to the Gensim library can be found here. We can install the library using the following command.

!pip install --upgrade gensim

Now let’s move on to the next step, where we import the required modules.

from pprint import pprint

from gensim.test.utils import common_texts

from gensim.models.doc2vec import Doc2Vec, TaggedDocument

Using the above lines of code, we have imported a sample data set named common_texts and the Doc2Vec model. We have also imported the TaggedDocument class so that we can prepare our data as the model requires, and pprint to display the results. Let’s see what the data looks like:

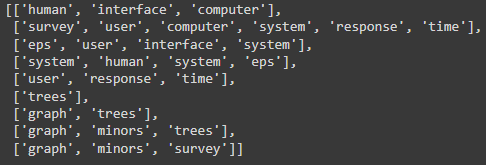

pprint(common_texts)

Output:

Here we can see that we have 9 sentences in the sample data. We can also consider them paragraphs, because the Doc2Vec model works on paragraphs. Let’s process the data set and assign a paragraph ID to each sentence using the TaggedDocument class.

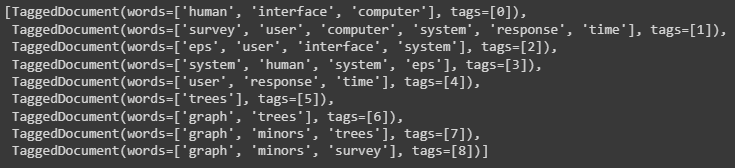

documents = [TaggedDocument(doc, [i]) for i, doc in enumerate(common_texts)]

pprint(documents)

Output:

Here we can see the tags alongside the words in our tagged documents. Let’s train our model on the documents.

model = Doc2Vec(documents, vector_size=5, window=2, min_count=1, workers=4, dm=0)

In the above code, we have instantiated the model with the tagged documents, a feature vector of dimensionality 5, a window of 2 (the maximum distance between the current and predicted word within a sentence), and 4 worker threads to train the model. With min_count=1, only words with a frequency lower than 1 are ignored, which means every word in the data is kept. The important point here is that dm=0 selects the distributed bag of words model; the default, dm=1, selects the distributed memory model. Let’s check the details of the model.

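# Passing the documents to the constructor has already built the vocabulary and trained the model, so a separate build_vocab call is not needed here.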

print(str(model))

Output:

As we can see, we have created the DBOW variant of the Doc2Vec model. Let’s check how it performs document embedding by inferring the vector for a new document.

vector = model.infer_vector(["human", "interface"])

pprint(vector)

Output:

The above output is the vector representation of the new document made up of the words we passed to the infer_vector method, and it can be compared with other vectors via cosine similarity. Because inference, like training, is based on iterative approximation, repeated inferences of the same text may produce slightly different vectors.

Final words

In this article, we have discussed document embeddings and seen that Doc2Vec models can be used to learn document embeddings from a text corpus. We also discussed the distributed bag of words model, a variant of Doc2Vec that can perform well in NLP tasks, and implemented it using Gensim.
