A future in which everyone has a digital twin to help with day-to-day tasks at work and beyond is not far off.

NVIDIA announced the general availability of NVIDIA ACE generative AI microservices to accelerate the next wave of digital humans. Companies in customer service, gaming, entertainment, and healthcare are at the forefront of adopting ACE, short for Avatar Cloud Engine, to simplify the creation, animation, and operation of lifelike digital humans across various sectors.

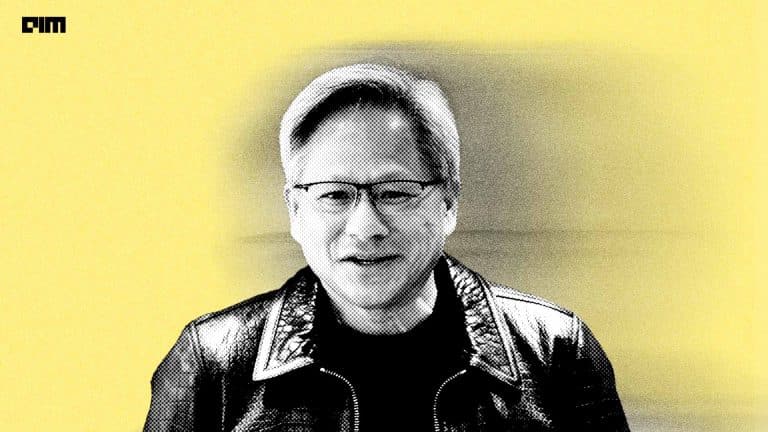

During his keynote at Computex 2024, NVIDIA chief Jensen Huang introduced NVIDIA Inference Microservices (NIMs), presenting them as avatar-based AI agents capable of working in teams to accomplish missions assigned by humans.

He added that NIM-based agents will be able to perform tasks such as retrieving information, conducting research, or using different tools.

“NIMs could also use tools that run on SAP and require learning a particular language called ABAP. Other NIMs might perform SQL queries. All of these NIMs are experts assembled as a team,” said Huang.

NVIDIA has made available its suite of ACE digital human GenAI tools. These include Riva, which provides automatic speech recognition (ASR), text-to-speech (TTS), and neural machine translation (NMT); Nemotron LLM for language understanding; Audio2Face for facial animation; and Omniverse RTX for realistic skin and hair rendering.

The Good Side of AI Avatars

Most recently, Zoom chief Eric Yuan said that he wants users to stop having to attend Zoom meetings themselves.

He believes one of the major benefits of AI at work will be the ability to create what he calls a “digital twin”: a deepfake avatar of yourself that can attend Zoom meetings on your behalf and even make decisions for you, freeing up your time for more important things, such as being with your family.

Meanwhile, LinkedIn co-founder Reid Hoffman recently created an AI twin of himself and discussed various topics on AI in an interview with it. He said he deepfaked himself to see “if conversing with an AI-generated version of myself can lead to self-reflection, new insights into my thought patterns, and deep truths.”

Similarly, executive educator and coach Marshall Goldsmith is creating an AI-powered virtual avatar of himself, a one-of-a-kind endeavour to share his skills and preserve his legacy for years to come. MarshallBoT, an AI-powered virtual business coach, is based on GPT-3.5 from OpenAI.

Aww Inc, a virtual human company based in Japan, introduced its inaugural virtual celebrity, Imma, in 2018. Since then, Imma has become an ambassador for prominent global brands in over 50 countries.

Building on this success, Aww is now poised to integrate ACE Audio2Face microservices for real-time animation, enabling highly engaging, interactive communication with its users.

OpenAI recently launched GPT-4o, featuring a voice function that makes it ideal for voice-controlled computing. In a new demo, the company demonstrated the model’s ability to generate multiple voices for different characters. Additionally, NVIDIA showcased GPT-4o’s capabilities by creating a digital human that interacted seamlessly with a real person.

“We’ve had the idea of voice control computers for a long time. We had Siri, and we had things before that; they’ve never felt natural to me to use,” said OpenAI’s Sam Altman in a recent podcast. He used the term ‘model fluidity’ to describe GPT-4o’s capabilities, which let users ask it to sing, talk faster, use different voices, and speak in various languages.

Meanwhile, Meta and Apple have also developed photorealistic avatars for Quest and Vision Pro, respectively. The potential for AI avatars as NPCs in the gaming industry is immense, allowing players to interact with them using natural language to enhance their experience. It would be like creating a virtual world within the game.

AI is Becoming More Intelligent Than Humans

Recently, a video demonstrating a reverse Turing test went viral. The experiment took place in a VR train compartment with five passengers: four AIs and one human. The passengers appeared as Aristotle, Mozart, Leonardo da Vinci, Cleopatra, and Genghis Khan, with four of the characters played by AI and one by the human. Their task was to work out, through discussion, which of them was human.

As the conversation progressed, Genghis Khan’s responses focused solely on conquest, lacking the expected nuance of a historical figure. The AI passengers quickly identified this discrepancy, their algorithms detecting the superficiality in his answers.

The reverse Turing test is becoming increasingly relevant as AI systems become more sophisticated and capable of convincingly mimicking human behaviour. This is where tools like Worldcoin come into the picture, creating a secure, privacy-preserving digital identity that stores a cryptographic hash of the biometric data rather than the personal information itself.
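
The idea of keeping only a hash of the data, not the data itself, can be illustrated with a minimal Python sketch. This is not Worldcoin's actual scheme: the function names `enroll` and `matches` and the byte-string "template" are hypothetical, and the sketch assumes the biometric reading is reproduced exactly on every scan. Real biometric readings are noisy, so production systems rely on specialised encodings rather than a plain hash.

```python
import hashlib
import hmac
import os


def enroll(template: bytes) -> tuple[bytes, bytes]:
    """Return (salt, digest) for a template; the template itself is not stored.

    `template` is a hypothetical stand-in for an encoded biometric reading.
    """
    salt = os.urandom(16)  # random salt so equal templates give different digests
    digest = hashlib.sha256(salt + template).digest()
    return salt, digest


def matches(template: bytes, salt: bytes, digest: bytes) -> bool:
    """Check a fresh template against the stored salt and digest."""
    candidate = hashlib.sha256(salt + template).digest()
    # Constant-time comparison avoids leaking how many bytes matched.
    return hmac.compare_digest(candidate, digest)


if __name__ == "__main__":
    salt, digest = enroll(b"example-template")
    print(matches(b"example-template", salt, digest))
    print(matches(b"someone-else", salt, digest))
```

Only the salt and the digest would be retained in such a design; the original template cannot be read back from them, which is the privacy property the paragraph above describes.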