After Stanford University launched Alpaca, a ChatGPT clone built for about $600, a team from UC Berkeley, CMU, Stanford, and UC San Diego developed Vicuna-13B, an open-source alternative to GPT-4 that reportedly achieves 90% of ChatGPT’s quality and cost around $300 to train. The model was trained by fine-tuning LLaMA on user-shared conversations gathered from ShareGPT.

Check out the GitHub repository here.

Additionally, the model weights were made publicly available along with the launch. The latest innovation is expected to garner significant interest from businesses and individuals looking to leverage cutting-edge technology for natural language processing applications.

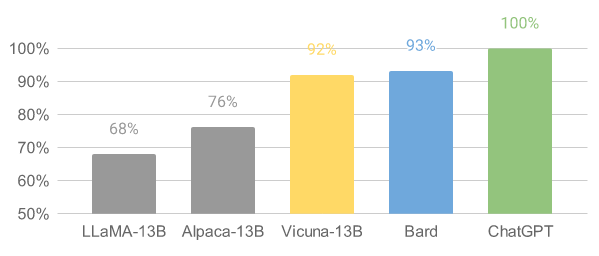

Early assessments using GPT-4 as a judge indicate that Vicuna-13B attains more than 90% of the quality of both OpenAI’s ChatGPT and Google’s Bard, and outperforms other open-source models such as LLaMA and Stanford Alpaca in more than 90% of cases. The results have generated considerable interest in the field of natural language processing, particularly among businesses seeking to leverage the latest advancements in AI. The researchers noted in a blog post that building an evaluation system for chatbots remains an open question requiring further research.
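To make the headline figures concrete, here is a minimal sketch of how percentages like these could be derived from per-question judge scores. It assumes each answer receives a numeric score from the judge, that "quality" is the candidate's total score as a share of the reference model's total, and that the second figure counts questions where the candidate scores strictly higher. These definitions, the function names, and the scores are illustrative assumptions, not the researchers' published method or data.

```python
from typing import Sequence


def relative_quality(candidate: Sequence[float], reference: Sequence[float]) -> float:
    """Candidate's total judge score as a percentage of the reference's total."""
    if len(candidate) != len(reference):
        raise ValueError("both models must be scored on the same questions")
    reference_total = sum(reference)
    if reference_total == 0:
        raise ValueError("reference total score must be non-zero")
    return 100.0 * sum(candidate) / reference_total


def win_rate(candidate: Sequence[float], other: Sequence[float]) -> float:
    """Percentage of questions where the candidate scores strictly higher."""
    if len(candidate) != len(other) or not candidate:
        raise ValueError("both models need scores for the same non-empty question set")
    wins = sum(c > o for c, o in zip(candidate, other))
    return 100.0 * wins / len(candidate)


# Made-up judge scores (1-10) for four questions; not real evaluation data.
candidate_scores = [8, 7, 9, 8]
reference_scores = [9, 8, 9, 9]
baseline_scores = [6, 7, 5, 6]

print(f"relative quality: {relative_quality(candidate_scores, reference_scores):.1f}%")
print(f"win rate vs baseline: {win_rate(candidate_scores, baseline_scores):.1f}%")
```

A score-ratio metric and a win-rate metric answer different questions, which is one reason a single "90%" figure is hard to interpret without knowing how it was computed.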

The researchers have made some bold claims about the model’s capabilities in natural language processing, and it will be interesting to see how it stacks up against other models like ChatGPT. While the two models share some similarities, Vicuna-13B differentiates itself through its efficiency and customisation features, which makes it a strong contender in the NLP space. Industry experts are closely monitoring its performance, anticipating it will set new benchmarks for AI-powered language processing.

Despite its impressive capabilities, the model has certain limitations. For example, it struggles with tasks that require reasoning or mathematical computation, and there may be some shortcomings in ensuring factual accuracy in its outputs.

Additionally, the model has yet to be thoroughly optimised to guarantee safety or to mitigate potential toxicity or bias. To address these concerns, the developers use OpenAI’s moderation API to filter out inappropriate user inputs in their online demo.
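The input-filtering step can be pictured as a gate placed in front of the model. The sketch below is a hypothetical illustration of that pattern only: `is_flagged` stands in for a call to a moderation service and `generate` for the chatbot itself. Both are placeholder callables, and none of the names come from the Vicuna demo code or from OpenAI's client library.

```python
from typing import Callable

REFUSAL_MESSAGE = "Sorry, this input cannot be processed."


def answer_if_allowed(
    user_input: str,
    is_flagged: Callable[[str], bool],
    generate: Callable[[str], str],
) -> str:
    """Run the model only when the moderation check does not flag the input."""
    if is_flagged(user_input):
        return REFUSAL_MESSAGE
    return generate(user_input)


# Placeholder implementations so the sketch runs without network access.
def demo_is_flagged(text: str) -> bool:
    # Hypothetical stand-in for a real moderation call.
    return "forbidden" in text.lower()


def demo_generate(text: str) -> str:
    # Hypothetical stand-in for the chatbot.
    return f"Echo: {text}"


print(answer_if_allowed("Hello there", demo_is_flagged, demo_generate))
print(answer_if_allowed("A FORBIDDEN request", demo_is_flagged, demo_generate))
```

Filtering at the input stage keeps flagged prompts from ever reaching the model, but it does not check what the model itself produces, which is consistent with the limitations described above.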

[Update: April 6, 2023 | 16:00] Previously, the article erroneously mentioned that the model was launched by Hugging Face. It has now been updated to show that it has been developed by a team from UC Berkeley, CMU, Stanford, and UC San Diego.