In a recent post on X, analyst and author Dion Hinchcliffe said that in enterprise-grade AI, retrieval-augmented generation (RAG) integrates database data with generative LLMs to produce highly relevant and contextually rich responses.

This method enhances the depth and accuracy of AI outputs, resulting in more precise and insightful responses by combining the strengths of both databases and LLMs.

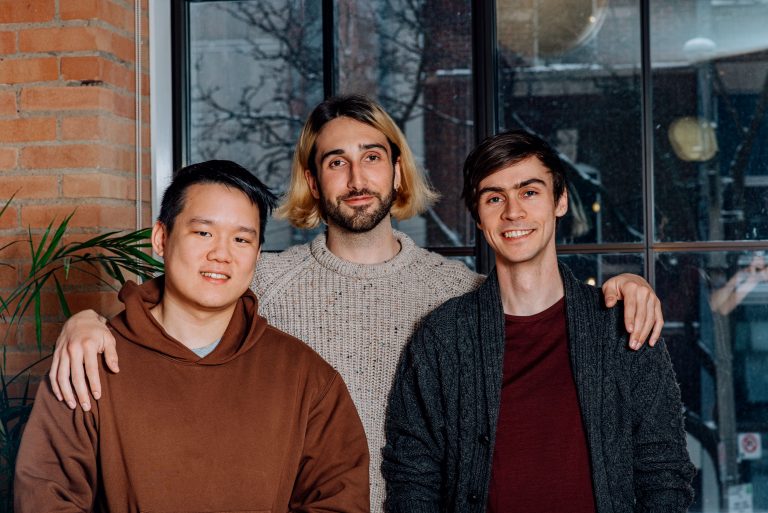

Echoing this, LlamaIndex CEO and co-founder Jerry Liu posted on May 24, 2024: “A lot of enterprise developers are creating GPT-like platforms for internal users, allowing them to customise the agent to their specific needs through an easy-to-use interface.

“RAGApp is the most comprehensive open-source project available for launching a RAG/agent chatbot on any infrastructure, all without writing a single line of application code—perfect for end users!”

Why Enterprises are Choosing to ‘RAG’

Hallucinations, rising compute costs, limited resources, and the need for a constant flow of current, dynamic information have all proven problematic for enterprise-grade LLM solutions.

A user on a Reddit thread pointed out that fine-tuning is a feasible option only if the data source you want to include is finished. RAG can provide information based on changes made yesterday or a document created this morning; a fine-tuned system cannot.

Moreover, the most important reasons for enterprises to adopt RAG are to reduce hallucinations and to provide more accurate, relevant, and trustworthy outputs, while maintaining control over information sources and enabling customisation to their specific needs and domains.

As for techniques that reduce LLM hallucinations via RAG, ServiceNow recently reduced hallucinations in structured outputs through RAG, enhancing LLM performance and enabling out-of-domain generalisation while minimising resource usage.

The technique involves a RAG system, which retrieves relevant JSON objects from external knowledge bases before generating text. This ensures the generation process is grounded in accurate and relevant data.
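The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration, not ServiceNow's implementation: the knowledge base, its field names, and the helper functions are all hypothetical, and simple token overlap stands in for a real retriever. The LLM call itself is left out; the sketch stops at the grounded prompt that would be sent to the model.

```python
import json
import re


def tokenize(text):
    """Lowercase a string and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, knowledge_base, top_k=2):
    """Rank JSON objects by token overlap with the query.

    Token overlap is an assumption made for illustration; a production
    system would typically use a trained or embedding-based retriever.
    """
    query_tokens = tokenize(query)
    scored = []
    for obj in knowledge_base:
        overlap = len(query_tokens & tokenize(json.dumps(obj)))
        if overlap > 0:
            scored.append((overlap, obj))
    # Sort by score only, highest first; ties keep their original order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [obj for _, obj in scored[:top_k]]


def build_prompt(query, retrieved):
    """Place the retrieved objects ahead of the request to ground generation."""
    context = "\n".join(json.dumps(obj, sort_keys=True) for obj in retrieved)
    return (
        "Use only the JSON objects below when answering.\n"
        f"{context}\n"
        f"Request: {query}"
    )


# Hypothetical knowledge base; the field names are illustrative only.
KNOWLEDGE_BASE = [
    {"step": "create_record", "description": "create a new incident record"},
    {"step": "send_email", "description": "send an email notification to a user"},
    {"step": "lookup_user", "description": "look up a user by name"},
]

query = "Send an email when an incident record is created"
retrieved = retrieve(query, KNOWLEDGE_BASE)
prompt = build_prompt(query, retrieved)
print(prompt)
```

Because the model is told to use only the retrieved objects, it has less room to invent steps or fields that do not exist in the knowledge base, which is the grounding effect the article describes.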

Many companies aim to integrate LLMs with their data to create RAG applications. For example, Cohere, a leading AI platform for enterprises, introduced Command R+ a few months ago, a powerful RAG-optimised LLM for enterprise AI.

RAG or Fine Tune?

Citing the pricing of GPT-3.5 as an example, ML engineer and teacher Santiago said, “99% of use cases need RAG, not fine-tuning,” since fine-tuning a model is more expensive and the two techniques serve different purposes.

On the other hand, fine-tuning enhances LLM capabilities for various applications. It improves sentiment analysis by better understanding text tone and emotion, aiding accurate customer feedback analysis. Additionally, it enables LLMs to identify specialised entities in domain-specific texts, improving data structuring.

Taking both into account, Armand Ruiz, VP of product at IBM, said in a recent LinkedIn post that fine-tuning and RAG are complementary LLM enhancement techniques.

Fine-tuning adapts the model’s core knowledge for specific domains, improving performance and cost-efficiency, while RAG injects up-to-date information during inference. A recommended approach is to start with RAG for quick testing, then combine it with fine-tuning to optimise performance and cost, leveraging the strengths of both methods for efficient, accurate AI solutions.

He also mentioned, “The answer to RAG vs fine-tuning is not an either/or choice.”

Considerations for choosing between RAG and fine-tuning include dynamic vs static performance, architecture, training data, model customisation, hallucinations, accuracy, transparency, cost, and complexity.

Hybrid models, blending the strengths of both methodologies, could pave the way for future advancements.

Does RAG Work for Every Enterprise?

A recent research paper by Tilmann Bruckhaus shows that implementing RAG effectively in enterprises, especially in compliance-regulated industries like healthcare and finance, can be tricky. Such deployments face unique challenges around data security, output accuracy, scalability, and integration with existing systems, among others.

“Each enterprise has unique data schemas, taxonomies, and domain-specific terminology that RAG systems must adapt to for accurate retrieval and generation,” noted Bruckhaus, touching upon customisation and domain adaptation.

However, the paper also noted that addressing these challenges may benefit from techniques in semantic search, information retrieval, and neural architectures, as well as careful system design and integration.

What’s Next

Compared with other methods and techniques, RAG appears to be very useful for enterprises. Compliance-regulated industries face some challenges in incorporating it, but these can be tackled through semantic search techniques, hybrid query strategies for optimising retrieval, experimental evaluation, and so on.

RAG is advancing day by day and will continue to improve, becoming more beneficial for enterprises over time.