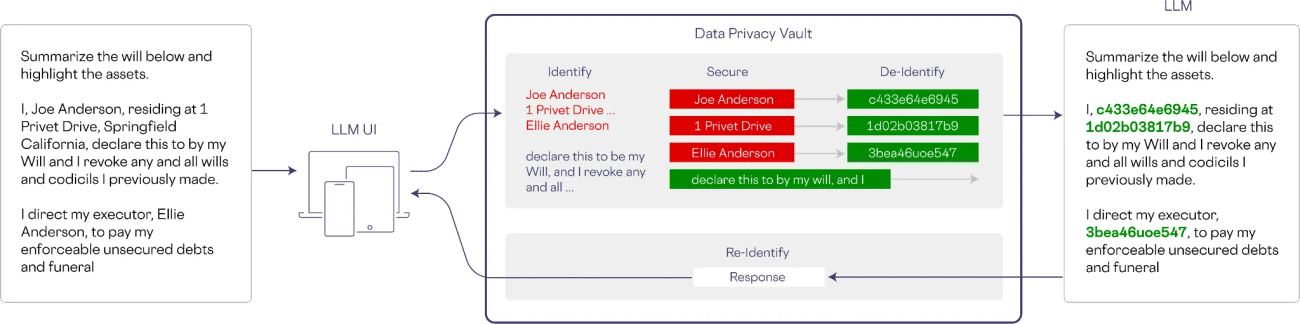

“Where’s the delete and undo button in an LLM?” asked a furious Anshu Sharma, co-founder and CEO of Skyflow, while introducing the concept of LLM vaults in a recent interaction with AIM.

“LLM vaults are built on top of the proprietary detect engine that detects sensitive data from the training datasets used to build LLMs, ensuring that this data is not inadvertently included in the models themselves,” he added, noting that the company has built a proprietary algorithm to detect sensitive information in the unstructured data stored in the vault.

The Need for LLM Vaults

Sharma argues that encryption alone is not enough. “While storing data in the cloud with encryption will safeguard your data from obvious risks, in reality, we need layers. The same data cannot be given to everyone. Instead, you can have an LLM vault that identifies sensitive data during inference and only shares non-sensitive versions of the information with the LLM,” he said, explaining why the LLM vault matters.

Jeff Barr, a vice president at Amazon Web Services (AWS), also noted that “the vault protects PII with support for use cases that span analytics, marketing, support, AI/ML, and so forth. For example, you can use it to redact sensitive data before passing it to an LLM.”
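To make the redaction idea concrete, here is a minimal Python sketch, assuming simple regular-expression rules for three kinds of PII (email addresses, US-style phone numbers and US Social Security numbers). The pattern table and the `find_pii` and `redact` helpers are hypothetical: this is not Skyflow's detection engine or any AWS API, and production detectors for unstructured text go well beyond regexes.

```python
import re

# Hypothetical detection rules for illustration only; production detectors
# for unstructured text are far more sophisticated than a few regexes.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}-\d{3}-\d{4}\b"),
}


def find_pii(text: str) -> dict[str, list[str]]:
    """Return every match for each PII type found in the text."""
    found = {}
    for label, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            found[label] = matches
    return found


def redact(text: str) -> str:
    """Replace each detected value with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Email jane.doe@example.com or call 555-123-4567 about SSN 123-45-6789."
print(find_pii(prompt))
print(redact(prompt))  # Email [EMAIL] or call [PHONE] about SSN [SSN].
```

Only the redacted prompt would then be sent to the model, so the LLM never sees the raw values. The trade-off is that redaction is one-way: the placeholders cannot be turned back into the original data.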

Gokul Ramrajan, a tech investor, explained the importance of LLM vaults: “If you think protecting private data was hard with databases, LLMs make it even harder. ‘No rows, no columns, no delete.’ What is needed is a data privacy vault to protect PII, one that polymorphically encrypts and tokenises sensitive data before passing it to an LLM.”
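Unlike redaction, tokenisation lets the original values be restored later. The following minimal sketch assumes a hypothetical in-memory `ToyVault` class (not Skyflow's API) and an email-only detector: each sensitive value is swapped for a random token before the prompt goes to the LLM, and tokens in the model's reply are mapped back afterwards. It does not implement the polymorphic encryption Ramrajan mentions, and a real vault would persist and encrypt the mapping rather than keep it in a Python dict.

```python
import re
import secrets

TOKEN_RE = re.compile(r"tok_[0-9a-f]{8}")
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


class ToyVault:
    """Hypothetical in-memory vault mapping random tokens to sensitive values."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token for a repeated value.
        if value in self._value_to_token:
            return self._value_to_token[value]
        token = f"tok_{secrets.token_hex(4)}"
        while token in self._token_to_value:  # avoid an (unlikely) collision
            token = f"tok_{secrets.token_hex(4)}"
        self._value_to_token[value] = token
        self._token_to_value[token] = value
        return token

    def detokenize(self, text: str) -> str:
        # Restore known tokens; leave unknown token-like strings untouched.
        return TOKEN_RE.sub(lambda m: self._token_to_value.get(m.group(0), m.group(0)), text)


def protect_prompt(vault: ToyVault, prompt: str) -> str:
    """Swap every email address for a vault token before the prompt leaves."""
    return EMAIL_RE.sub(lambda m: vault.tokenize(m.group(0)), prompt)


vault = ToyVault()
safe_prompt = protect_prompt(vault, "Write a short note to jane.doe@example.com.")
print(safe_prompt)  # e.g. "Write a short note to tok_3f9a1c07." (token is random)

# Pretend the LLM echoed the token back in its answer.
llm_reply = safe_prompt.replace("Write a short note to", "Sending a note to")
print(vault.detokenize(llm_reply))  # Sending a note to jane.doe@example.com.
```

Reusing one token per distinct value keeps references consistent, so the model can still reason about "the same customer" without ever seeing who that customer is.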

A few weeks ago, when Slack started training on user data, Sama Carlos Samame, co-founder of BoxyHQ, raised a similar concern, arguing that organisations using AI tools should have LLM vaults to safeguard their sensitive data.

Going Beyond LLM Vaults

The likes of OpenAI, Anthropic and Cohere are also introducing new methods and features to handle user and enterprise data. For instance, data sent through the OpenAI API is not used to train its models, and ChatGPT users can opt out of sharing their conversations for training. Privacy options like these partly reduce the need for LLM vaults.

Anthropic, meanwhile, has adopted strict policies on how it uses user data to train its models: it does not do so unless a user volunteers the data or a specific scenario arises in which it collects user data.

Cohere, for its part, has collaborated with AI security company Lakera to protect against LLM data leakage by defining new LLM security standards. Together, they have created the LLM Security Playbook and the Prompt Injection Attacks Cheatsheet to address prevalent LLM cybersecurity threats.

Other techniques, such as fully homomorphic encryption (FHE), allow computations to be performed directly on encrypted data without decrypting it first. The data stays encrypted throughout the entire computation, and the result is also encrypted.
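FHE schemes that support arbitrary computation are complex and usually lattice-based, so practical work tends to rely on libraries such as Microsoft SEAL or OpenFHE. The core idea of computing on ciphertexts can still be sketched with the Paillier cryptosystem, which is only partially homomorphic: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. This minimal Python sketch assumes tiny toy primes and is completely insecure; it illustrates the principle, not FHE itself, and the helper names are hypothetical.

```python
import math
import secrets


def keygen(p: int, q: int):
    """Toy Paillier key generation from two distinct primes (insecure sizes)."""
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # valid shortcut because we fix g = n + 1
    return (n, n + 1), (n, lam, mu)


def encrypt(public_key, m: int) -> int:
    n, g = public_key
    n2 = n * n
    r = secrets.randbelow(n - 1) + 1
    while math.gcd(r, n) != 1:  # r must be invertible modulo n
        r = secrets.randbelow(n - 1) + 1
    return pow(g, m, n2) * pow(r, n, n2) % n2


def decrypt(private_key, c: int) -> int:
    n, lam, mu = private_key
    x = pow(c, lam, n * n)
    return (x - 1) // n * mu % n  # L(x) = (x - 1) / n, then scale by mu


def add_encrypted(public_key, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod n)."""
    n, _ = public_key
    return c1 * c2 % (n * n)


public_key, private_key = keygen(61, 53)
c1 = encrypt(public_key, 42)
c2 = encrypt(public_key, 100)
c_sum = add_encrypted(public_key, c1, c2)  # computed without decrypting
print(decrypt(private_key, c_sum))  # 142
```

Anyone holding only the public key can compute `c_sum`, yet only the private-key holder learns the result. FHE extends this property to both additions and multiplications, which is what makes arbitrary computation on encrypted data possible.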